
Machine Learning: A Brief Overview

“The question of whether a computer can think is no more interesting than the question of whether a submarine can swim.”

-Edsger W. Dijkstra

Overview

Machine Learning is, at its core, a set of processes that a computer can use to “learn” from data without being explicitly programmed for each task. It builds statistical models from the data you give the system, aimed at a task you define, and then uses those models to make predictions about future data.
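To make the fit-then-predict idea concrete, here is a minimal Python sketch. It uses ordinary least-squares line fitting, one of the simplest statistical models, chosen purely for illustration. The function names and training data are hypothetical. Notice that the rule y = 2x + 1 is never written into the program; the slope and intercept are estimated from the examples.

```python
# Minimal sketch: "learning" a rule from examples instead of hard-coding it.
# Fit a straight line y = slope * x + intercept by ordinary least squares,
# then use the fitted model to predict y for an input it has not seen.

def fit_line(xs, ys):
    """Return (slope, intercept) that minimize squared error over the examples."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Covariance of x and y divided by the variance of x gives the least-squares slope.
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    slope = cov_xy / var_x
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(model, x):
    """Apply a fitted (slope, intercept) model to a new input."""
    slope, intercept = model
    return slope * x + intercept


if __name__ == "__main__":
    # Hypothetical training data that happens to follow y = 2x + 1.
    xs = [1.0, 2.0, 3.0, 4.0]
    ys = [3.0, 5.0, 7.0, 9.0]
    model = fit_line(xs, ys)
    print(model)               # (2.0, 1.0)
    print(predict(model, 10))  # 21.0
```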

Machine Learning generally follows one of two approaches: supervised and unsupervised learning. To understand the difference between the two, let’s look at a couple of scenarios.

- It’s the first day of kindergarten, and today you’re learning shapes! The teacher shows you a variety of pictures and tells you, “this one is a square, this one is a circle,” and so on. Then they ask you to sort some new pictures into the proper categories based on what you just learned. One picture is a little different from the ones you just saw, but it looks more like a circle than a square, so you put it with the circles.

- We’re still in school, but a few years later. You’re now off to college, and things are a little unfamiliar. You try to find your way around, but nobody’s guiding you. You have some idea of what to look for: you know that different rooms will hold different things, so that’s probably a good place to start. After a few days of poking around and settling in, you have a sense of what “normal” looks like. When a fire starts in one of the buildings across the street, you immediately recognize it as out of the ordinary, not a day-to-day occurrence.

The first example shows the general idea of supervised learning. A teacher (you, the end user) gives the student (your computer) a set of labels, along with examples of the conditions that lead to each label. You then give your computer a new set of data, and it uses what it learned in the training step to predict where each new item fits. Unsupervised learning works differently, and it is closer to the second example: the computer is given an unlabeled set of data and set loose to discover patterns and make inferences on its own, guided only by parameters you provide for how to do so. Learning what “normal” looks like and flagging anything unusual, like the fire across the street, is one common use.
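The two scenarios can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production technique. The supervised half is a nearest-centroid classifier trained on labeled shape examples. The unsupervised half is a simple z-score anomaly detector that learns what “normal” looks like from unlabeled readings. The feature encoding (corner count and roundness), the readings, the 3-standard-deviation threshold, and all function names are hypothetical.

```python
import statistics


# --- Supervised: learn from labeled examples (the kindergarten scenario) ---

def train_centroids(examples):
    """examples: list of (feature_tuple, label). Returns {label: mean feature tuple}."""
    grouped = {}
    for features, label in examples:
        grouped.setdefault(label, []).append(features)
    # Average each feature column within a label to get that label's "prototype".
    return {
        label: tuple(sum(column) / len(column) for column in zip(*vectors))
        for label, vectors in grouped.items()
    }


def classify(centroids, features):
    """Return the label whose centroid is closest (squared Euclidean distance)."""
    def squared_distance(centroid):
        return sum((a - b) ** 2 for a, b in zip(features, centroid))
    return min(centroids, key=lambda label: squared_distance(centroids[label]))


# --- Unsupervised: learn what "normal" looks like from unlabeled data (the college scenario) ---

def fit_normal(values):
    """Summarize unlabeled observations by their mean and sample standard deviation."""
    return statistics.mean(values), statistics.stdev(values)


def is_anomaly(profile, value, threshold=3.0):
    """Flag values more than `threshold` standard deviations away from the learned mean."""
    mean, stdev = profile
    return abs(value - mean) / stdev > threshold


if __name__ == "__main__":
    # Hypothetical features: (number of corners, roundness from 0 to 1).
    labeled = [((4, 0.1), "square"), ((4, 0.2), "square"),
               ((0, 0.9), "circle"), ((0, 1.0), "circle")]
    centroids = train_centroids(labeled)
    print(classify(centroids, (1, 0.8)))  # circle: a bit different, but closest to the circles

    # Hypothetical daily readings with no labels attached.
    readings = [10, 12, 11, 9, 10, 11, 12, 10]
    profile = fit_normal(readings)
    print(is_anomaly(profile, 11))  # False: within the normal range
    print(is_anomaly(profile, 40))  # True: the "fire across the street"
```

These two methods were chosen only because they are short. The same split between learning from labels and learning from unlabeled structure applies to much richer models.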

Use Cases

Machine Learning algorithms have a wide range of applications, from data security to financial forecasting. Organizations collect more machine data every day, so the ability to find new patterns and hidden insights in that data is an increasingly valuable tool.

- Finance

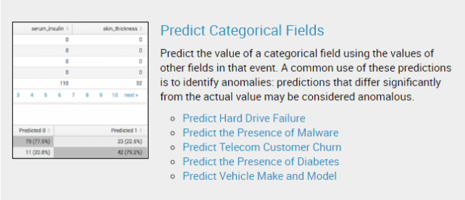

As in our kindergarten example above, one of the most common uses of machine learning is data classification. An insurance company could combine customers’ credit scores, driving habits, and court filings to sort them into risk categories. It could then use that information to automatically predict which category a new customer falls into. Engineers at August Schell are even using Splunk’s Machine Learning Toolkit to monitor their Bitcoin transactions at home.
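The insurance scenario is a classification problem. As a hedged sketch, the Python below uses k-nearest neighbors, which is one common classification technique. The article does not say which algorithm an insurer would use. The customer records are invented, the risk tiers are hypothetical, and “at-fault claims” is an assumed stand-in for driving habits.

```python
import math
from collections import Counter


def make_min_max_scaler(rows):
    """Return a function that rescales each feature to [0, 1] using the training rows' ranges."""
    lows = [min(column) for column in zip(*rows)]
    highs = [max(column) for column in zip(*rows)]

    def scale(row):
        return tuple(
            (value - low) / (high - low) if high > low else 0.0
            for value, low, high in zip(row, lows, highs)
        )
    return scale


def knn_predict(training, query, k=3):
    """Predict a label for `query` by majority vote of its k nearest training examples."""
    # Scale first so a 300-850 credit score doesn't drown out a 0-5 claim count.
    scale = make_min_max_scaler([features for features, _ in training])
    scaled_query = scale(query)
    nearest = sorted(
        training,
        key=lambda item: math.dist(scale(item[0]), scaled_query),
    )[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


if __name__ == "__main__":
    # Hypothetical customers: (credit score, at-fault claims, court filings) -> risk tier.
    customers = [
        ((780, 0, 0), "low"), ((740, 1, 0), "low"), ((720, 0, 0), "low"),
        ((650, 2, 1), "medium"), ((620, 1, 1), "medium"), ((600, 3, 0), "medium"),
        ((540, 4, 2), "high"), ((510, 3, 3), "high"), ((480, 5, 2), "high"),
    ]
    print(knn_predict(customers, (760, 0, 0)))  # low
    print(knn_predict(customers, (630, 2, 1)))  # medium
    print(knn_predict(customers, (500, 4, 3)))  # high
```

Scaling each feature to the 0-to-1 range matters here. Without it, the distances would be dominated by the credit score simply because its numbers are larger.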

- Security

Machine Learning is an incredibly strong tool for the increasingly complex modern data center. Algorithms can be trained to detect network activity that matches the profile of previous malware attacks. Natural Language Processing (NLP) models can also analyze text data for evidence of planned threats against a network.
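For the text-analysis case, a classic starting point is a naive Bayes classifier. The sketch below is a minimal multinomial naive Bayes written in plain Python. The training messages, the “threat” and “benign” labels, and the class and function names are all hypothetical. A real deployment would need far more labeled data than six sentences.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z']+", text.lower())


class NaiveBayesTextClassifier:
    """Multinomial naive Bayes with add-one (Laplace) smoothing."""

    def fit(self, documents, labels):
        self.doc_counts = Counter(labels)
        self.word_counts = {label: Counter() for label in self.doc_counts}
        for document, label in zip(documents, labels):
            self.word_counts[label].update(tokenize(document))
        self.vocabulary = set()
        for counts in self.word_counts.values():
            self.vocabulary.update(counts)
        self.total_docs = len(documents)
        return self

    def predict(self, document):
        best_label, best_score = None, -math.inf
        for label, counts in self.word_counts.items():
            total_words = sum(counts.values())
            # Work in log space so many small probabilities don't underflow.
            score = math.log(self.doc_counts[label] / self.total_docs)
            for word in tokenize(document):
                if word not in self.vocabulary:
                    continue  # ignore words never seen in training
                score += math.log((counts[word] + 1) / (total_words + len(self.vocabulary)))
            if score > best_score:
                best_label, best_score = label, score
        return best_label


if __name__ == "__main__":
    # Hypothetical, tiny training set.
    messages = [
        "we will take down the network tonight",
        "plan to breach the firewall and steal credentials",
        "launch the ddos attack on the server at midnight",
        "the network maintenance window is tonight",
        "please reset my password",
        "lunch meeting moved to noon",
    ]
    labels = ["threat", "threat", "threat", "benign", "benign", "benign"]
    model = NaiveBayesTextClassifier().fit(messages, labels)
    print(model.predict("breach the server and steal the data"))  # threat
    print(model.predict("please reset the password"))             # benign
```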

- Automation and AI

The same kind of NLP models have been used to build chatbots, which use word sequences and probability models learned from existing corpora to generate new text. These models have become more sophisticated over time as more data has become available to work with. Adobe is currently developing a product known as VoCo, which takes speech data from any user and generates new speech that mimics the original with incredible accuracy, even for phrases that weren’t present in the original data. VoCo uses a neural network known as WaveNet to generate raw audio from sampled speech. WaveNet was developed by DeepMind, the same company whose AlphaGo program defeated Go champion Lee Sedol in 2016.

Google’s Machine Learning platform also contains a Video Analysis API that can search through videos, extract metadata, and perform annotation tasks. Its image recognition software can iterate through images and automatically tag photos based on content that its Machine Learning models have learned to recognize and identify.
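The “word sequences and probability models” idea can be illustrated with a bigram (first-order Markov chain) text generator. The generator records which words follow which in a corpus, then produces text by repeatedly sampling a plausible next word. This is a deliberately simple sketch, because modern chatbots rely on far larger neural models. The toy corpus and function names are hypothetical.

```python
import random
from collections import defaultdict


def build_bigram_model(corpus):
    """Map each word to every word that followed it in the corpus (duplicates kept)."""
    words = corpus.split()
    successors = defaultdict(list)
    for current, following in zip(words, words[1:]):
        successors[current].append(following)
    return successors


def generate(model, start, max_words=12, rng=None):
    """Random walk over the bigram model, starting from `start`."""
    rng = rng or random.Random()
    words = [start]
    # Stop at the length limit or when the last word was never followed by anything.
    while len(words) < max_words and model.get(words[-1]):
        # Frequent successors appear more often in the list, so they are picked more often.
        words.append(rng.choice(model[words[-1]]))
    return " ".join(words)


if __name__ == "__main__":
    # Hypothetical toy corpus; real systems learn from vastly larger text collections.
    corpus = "the cat sat on the mat and the dog sat on the rug"
    model = build_bigram_model(corpus)
    print(generate(model, "the", rng=random.Random(42)))
```

Every pair of adjacent words in the output occurred somewhere in the corpus, but the sentence as a whole can be new. That is the same effect, at toy scale, as generating phrases that were never in the training data.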

One of the biggest hurdles on the way to the current revolution was data availability. To work properly and accurately, many models need a few hundred data points just to get started. The growing prevalence of Big Data has lowered this barrier immensely. Our learning models will only become more refined over time as we use the hidden patterns and inferences we find in our data to tweak and improve them.

According to a 2013 article from IBM, 2.5 quintillion bytes of data are produced every day. That is roughly 250,000 times the size of the Library of Congress’s print collection. It’s about time we start making some of that data work for us.
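As a quick sanity check on the comparison, dividing the daily figure by 250,000 gives the size it implies for the print collection. The sketch assumes decimal (SI) units, where 1 TB is 10^12 bytes. The variable names are illustrative.

```python
# Figures as quoted in the article; decimal (SI) units assumed.
BYTES_PER_DAY = 2.5e18           # 2.5 quintillion bytes
RATIO_TO_PRINT_COLLECTION = 250_000

# Size of the print collection implied by the comparison.
implied_print_collection_bytes = BYTES_PER_DAY / RATIO_TO_PRINT_COLLECTION
implied_print_collection_tb = implied_print_collection_bytes / 1e12

print(f"{implied_print_collection_tb:.0f} TB")  # 10 TB
```

In other words, the world would generate roughly one print collection's worth of data (about 10 TB) every third of a second.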

If you’re interested in implementing Machine Learning tools in your environment, you can reach out to August Schell at https://augustschell.com/contact-us/, or by phone at (301)-838-9470. Our team of engineers is prepared to answer your questions and guide you towards a successful deployment.